Understanding MCP

Introduction: The USB-C of AI Tools

Obligatory MCP Article Disclaimer: Yes, I’m fully aware I’m the 10,437th person to write about MCP this month. Consider this my official ticket to board the hype train! 🚂 In my defense, at least I waited until May 2025 to jump on the bandwagon, which practically makes me a late adopter by AI standards. As they say, if you can’t beat the algorithm, feed the algorithm!

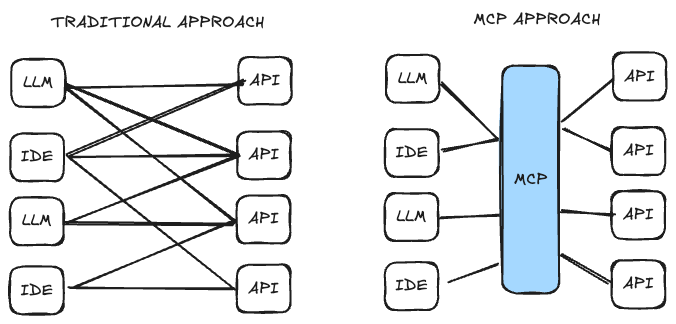

Imagine trying to connect your smartphone to your computer, but instead of just plugging in a USB cable, you have to build a custom connector from scratch. That’s essentially what developers have been doing with AI applications for years - creating bespoke integrations between large language models (LLMs) and the tools they need to access. It’s inefficient, inconsistent, and a massive drain on development resources.

Enter the Model Context Protocol (MCP) - what Anthropic (the creators of Claude) calls “the USB-C port for AI applications.” Released in November 2024, MCP has seen explosive growth in adoption throughout early 2025, with major players like OpenAI, GitHub, Cursor, VS Code, and many others jumping on board.

For developers working with any kind of external data or tooling, MCP offers particularly exciting possibilities. But before we dive into specific applications, let’s understand what MCP actually is, how it works, and what limitations we need to consider.

What is MCP, Really?

At its core, MCP is an open protocol that standardizes how AI assistants connect to external tools, data sources, and systems. It creates a universal interface between:

- Hosts - Applications that want access to external data and functionality (like Claude Desktop, Cursor, VS Code)

- MCP Clients - Protocol clients, created by the host, that each maintain a one-to-one connection with a server

- MCP Servers - Lightweight programs that expose specific capabilities through the standardized protocol

In practical terms, MCP lets developers create standardized “servers” that expose functionality like:

- Tools - Functions that perform actions (like searching a database or calling an API)

- Resources - Data that an AI assistant can read (like file contents or metadata)

- Prompts - Reusable prompt templates that a server offers to guide common interactions

Instead of every developer building a separate integration between each AI model and each external service, a service needs only one MCP server. Any AI assistant that supports MCP can then use that connection immediately.
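To make the tool primitive concrete, here is a minimal sketch of what a server does when a client sends the `tools/list` and `tools/call` requests. The method names, the `inputSchema` field, and the `content`/`isError` result shape follow the MCP specification as I read it; the `search_database` tool and its data are hypothetical, and a real server would typically use one of the official SDKs rather than hand-written dispatch.

```python
import json
from typing import Any, Callable

# Hypothetical in-memory data standing in for a real backend.
CUSTOMERS = [
    {"name": "Acme Corp", "tier": "enterprise"},
    {"name": "Tiny Shop", "tier": "smb"},
]


def search_database(tier: str) -> str:
    """Hypothetical tool: return the names of customers in a given tier."""
    matches = [c["name"] for c in CUSTOMERS if c["tier"] == tier]
    return ", ".join(matches) or "no matches"


# Tool registry: name -> (description, JSON Schema for the arguments, handler).
TOOLS: dict[str, tuple[str, dict[str, Any], Callable[..., str]]] = {
    "search_database": (
        "Find customers by tier",
        {
            "type": "object",
            "properties": {"tier": {"type": "string"}},
            "required": ["tier"],
        },
        search_database,
    ),
}


def make_error(request_id: Any, code: int, message: str) -> dict[str, Any]:
    """Build a JSON-RPC 2.0 error response."""
    return {"jsonrpc": "2.0", "id": request_id,
            "error": {"code": code, "message": message}}


def handle_request(request: dict[str, Any]) -> dict[str, Any]:
    """Route one JSON-RPC request to the matching tools/* method."""
    request_id, method = request["id"], request["method"]
    if method == "tools/list":
        tools = [{"name": name, "description": desc, "inputSchema": schema}
                 for name, (desc, schema, _) in TOOLS.items()]
        return {"jsonrpc": "2.0", "id": request_id, "result": {"tools": tools}}
    if method == "tools/call":
        params = request.get("params", {})
        entry = TOOLS.get(params.get("name"))
        if entry is None:
            # -32602 is the JSON-RPC "invalid params" code.
            return make_error(request_id, -32602, f"Unknown tool: {params.get('name')}")
        text = entry[2](**params.get("arguments", {}))
        # Tool results come back as a list of typed content items.
        result = {"content": [{"type": "text", "text": text}], "isError": False}
        return {"jsonrpc": "2.0", "id": request_id, "result": result}
    # -32601 is the JSON-RPC "method not found" code.
    return make_error(request_id, -32601, f"Method not found: {method}")


if __name__ == "__main__":
    call = {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
            "params": {"name": "search_database",
                       "arguments": {"tier": "enterprise"}}}
    print(json.dumps(handle_request(call)))
```

Because only the registry changes from one service to the next, any client that speaks these two methods can discover and call the tool without custom glue code.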

MCP vs. Alternative Approaches: A Comparison

To truly understand MCP’s place in the AI integration ecosystem, let’s compare it with other approaches:

| Feature | Traditional Function Calling | OpenAI Plugins | Model Context Protocol (MCP) | ACP/A2A Protocols |
|---|---|---|---|---|
| Standardized Protocol | ❌ (Custom impl) | ✅ (OpenAI only) | ✅ (Open standard) | ✅ (Emerging) |
| Cross-LLM Compatibility | ❌ | ❌ | ✅ | ✅ |
| Local-first Operation | ✅ | ❌ (Cloud only) | ✅ | ❌ |
| Remote Operation | ✅ | ✅ | ✅ | ✅ |
| IDE Integration | ❌ | ❌ | ✅ | ❓ (Limited) |
| Creative Tool Integration | ❌ (Custom plugins only) | ❌ (Web-based only) | ✅ (Emerging) | ❌ (Not yet) |
| Development Complexity | Simple | Complex | Moderate | Complex |
| Ecosystem Maturity | Limited | Discontinued (2024) | Exploding | Early |
| Agent-to-Agent Communication | ❌ | ❌ | ❌ | ✅ |
| Binary Asset Integration | ✅ (Custom impl) | ❓ (Limited) | ✅ | ❓ (Unproven) |

Key Differences:

- Traditional Function Calling
  - Custom implementation for each LLM
  - No standardized protocol
  - Works well for simple integrations but creates maintenance overhead

- OpenAI Plugins
  - Limited to OpenAI models
  - Cloud-based only
  - Had a dedicated plugin store, but ChatGPT plugins were discontinued in 2024 in favor of GPTs
  - More complex implementation requirements

- Model Context Protocol (MCP)
  - Open standard that works across LLMs
  - Both local and remote operation
  - Rapidly growing ecosystem
  - Strong IDE integration
  - Moderate implementation complexity

- Agent Protocols (ACP/A2A)
  - Focused on agent-to-agent communication
  - Newer with smaller ecosystem
  - More complex implementation requirements
  - Limited IDE integration (so far)

For any external API or service integration, MCP offers a sweet spot: standardization across LLMs, flexibility in deployment options, and a rapidly growing ecosystem of tools and examples to build upon.

How MCP Works: The Transport Layer

MCP can operate over two primary transport mechanisms:

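- Standard IO (stdio) - Used for local setups where everything runs on the same machine: the client launches the MCP server as a subprocess and exchanges messages over its standard input and output. This is simple but limited to a single computer.

- HTTP with Server-Sent Events (SSE) - Enables remote connections over HTTP, allowing MCP servers to run on different machines than the client. This is what makes production-scale deployments possible. The March 2025 revision of the specification replaces this transport with “Streamable HTTP,” in which SSE becomes an optional way to stream server messages.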

The choice between these transport mechanisms determines how your MCP implementation will scale and deploy, with important security implications we’ll discuss later.
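On the stdio side, the specification frames each JSON-RPC message as one line of UTF-8 JSON, with no embedded newlines, written to the server's stdin or stdout. Below is a minimal sketch of that framing, with an in-memory buffer standing in for a real subprocess pipe. The `clientInfo` values are placeholders, and `2025-03-26` is simply the protocol version string this example assumes.

```python
import io
import json
from typing import Any, Iterator, TextIO


def write_message(stream: TextIO, message: dict[str, Any]) -> None:
    """Write one JSON-RPC message as a single newline-terminated line."""
    # json.dumps escapes newlines inside strings, so the line has no raw newlines.
    stream.write(json.dumps(message) + "\n")
    stream.flush()


def read_messages(stream: TextIO) -> Iterator[dict[str, Any]]:
    """Yield one parsed JSON-RPC message per non-empty input line."""
    for line in stream:
        if line.strip():
            yield json.loads(line)


# The first request a client sends on a new connection is "initialize".
initialize = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "initialize",
    "params": {
        "protocolVersion": "2025-03-26",
        "capabilities": {},
        "clientInfo": {"name": "example-client", "version": "0.1.0"},
    },
}

if __name__ == "__main__":
    # Simulate the server's stdin with an in-memory buffer instead of a real pipe.
    buffer = io.StringIO()
    write_message(buffer, initialize)
    write_message(buffer, {"jsonrpc": "2.0", "method": "notifications/initialized"})
    buffer.seek(0)
    for msg in read_messages(buffer):
        print(msg["method"])
```

With HTTP-based transports the same JSON-RPC messages travel in HTTP bodies instead, which is why the transport choice affects deployment but not the message format.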

The Pros: Why MCP Matters for External Service Integration

1. Unified API Access

With an MCP server for any service, any MCP-compatible AI assistant can immediately access that service without custom coding for each assistant. This means:

- Consistent experience across tools like Claude, ChatGPT, GitHub Copilot, and IDE plugins

- One-time integration effort rather than rebuilding for each AI platform

- Standardized approach to authentication and resource access

2. Enhanced LLM Capabilities

Through the host application, MCP lets LLMs call external tools as part of a conversation:

- Natural language data queries - “Find me the latest sales figures for our enterprise customers”

- Multi-step workflows - “Pull the user engagement metrics from last month, filter to high-value accounts, and compare with our target KPIs”

- Context-aware actions - “Update the project timeline based on the delays mentioned in yesterday’s standup notes”

3. Developer Productivity Boost

For development teams:

- Reduced integration time - Build once, use everywhere

- Simplified maintenance - Updates to the MCP server automatically benefit all connected clients

- Ecosystem benefits - Leverage the growing collection of open-source MCP servers for other tools

4. New AI-Powered Workflows

MCP enables entirely new ways of working:

- AI-assisted data analysis - “Help me understand patterns in our customer feedback”

- Intelligent recommendations - “Based on this article I’m writing, suggest relevant references from our knowledge base”

- Automated resource management - “Help me organize my project files by topic and priority”

The Cons: Challenges of MCP Implementation

1. Security Concerns

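The protocol and its current implementations have significant security gaps:

- Limited authentication mechanisms - An authorization framework (based on OAuth 2.1, for HTTP transports) only arrived in the March 2025 revision of the specification, and the protocol is still maturing in how it handles security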

- Potential for data exposure - Without proper safeguards, sensitive data could be exposed

- Access control challenges - Managing which users/systems can access what functionality
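One common mitigation is to enforce access control inside the server itself, both when listing tools and when calling them. The sketch below assumes the server already knows who the caller is (for example, from an authenticated HTTP session); the role names, tools, and policy table are hypothetical, and MCP does not prescribe this design.

```python
from dataclasses import dataclass

# Hypothetical policy: the roles allowed to call each tool.
TOOL_POLICY: dict[str, frozenset[str]] = {
    "search_database": frozenset({"analyst", "admin"}),
    "delete_records": frozenset({"admin"}),
}


@dataclass(frozen=True)
class Caller:
    user_id: str
    roles: frozenset[str]


class AccessDenied(Exception):
    """Raised when a caller is not allowed to use a tool."""


def visible_tools(caller: Caller) -> list[str]:
    """Filter the tool list so callers only discover what they may use."""
    return sorted(name for name, roles in TOOL_POLICY.items() if caller.roles & roles)


def authorize_tool_call(caller: Caller, tool_name: str) -> None:
    """Deny by default: unknown tools and unmatched roles are both rejected."""
    allowed = TOOL_POLICY.get(tool_name, frozenset())
    if not caller.roles & allowed:
        raise AccessDenied(f"{caller.user_id} may not call {tool_name}")


if __name__ == "__main__":
    analyst = Caller("u-123", frozenset({"analyst"}))
    print(visible_tools(analyst))
    authorize_tool_call(analyst, "search_database")  # allowed: returns None
    try:
        authorize_tool_call(analyst, "delete_records")
    except AccessDenied as exc:
        print("denied:", exc)
```

Filtering the tool list as well as checking each call keeps the model from even seeing tools it could never use.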

2. Immature Ecosystem

Despite rapid growth, MCP is still in its early stages:

- Lack of standardized marketplaces - Finding and vetting MCP servers isn’t straightforward

- Limited production examples - Most implementations are local/experimental

- Evolving specifications - The protocol itself is still changing

3. Implementation Complexity

Building robust MCP servers requires:

- Advanced state management - Especially for HTTP transport mechanisms

- Cross-request correlation - Maintaining context across multiple interactions

- Error handling edge cases - The specification doesn’t fully address all scenarios
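At the message level, correlation relies on JSON-RPC ids: every request carries an id, and the matching response echoes it, possibly out of order. A minimal client-side sketch of that bookkeeping follows. The class and method names are my own, and a production client would also need timeouts and cancellation, which this omits.

```python
import itertools
from typing import Any, Optional


class RequestTracker:
    """Match JSON-RPC responses to outstanding requests by their id."""

    def __init__(self) -> None:
        self._next_id = itertools.count(1)
        self._pending: dict[int, str] = {}  # request id -> method name

    def new_request(self, method: str,
                    params: Optional[dict[str, Any]] = None) -> dict[str, Any]:
        """Build a request with a fresh id and remember it as pending."""
        request_id = next(self._next_id)
        self._pending[request_id] = method
        message: dict[str, Any] = {"jsonrpc": "2.0", "id": request_id, "method": method}
        if params is not None:
            message["params"] = params
        return message

    def resolve(self, response: dict[str, Any]) -> tuple[str, Any]:
        """Return (method, result); raise for unknown ids or error responses."""
        method = self._pending.pop(response["id"], None)
        if method is None:
            raise KeyError(f"no pending request with id {response['id']}")
        if "error" in response:
            raise RuntimeError(f"{method} failed: {response['error']['message']}")
        return method, response["result"]

    @property
    def outstanding(self) -> int:
        return len(self._pending)


if __name__ == "__main__":
    tracker = RequestTracker()
    first = tracker.new_request("tools/list")
    second = tracker.new_request(
        "tools/call", {"name": "search_database", "arguments": {"tier": "smb"}})
    # Responses may arrive in any order; the id says which request each answers.
    print(tracker.resolve({"jsonrpc": "2.0", "id": second["id"], "result": {"content": []}}))
    print(tracker.resolve({"jsonrpc": "2.0", "id": first["id"], "result": {"tools": []}}))
    print("outstanding:", tracker.outstanding)
```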

4. Vendor Lock-in Risks

While MCP aims for standardization, there are risks:

- Competing standards - IBM’s Agent Communication Protocol (ACP) and Google’s Agent2Agent (A2A) offer alternatives

- Custom extensions - Vendors may extend MCP in proprietary ways

- Implementation divergence - Different platforms may implement MCP differently

Limitations of MCP for External Service Integration

1. Access Management Challenges

MCP doesn’t directly address:

- Rights management - How to handle varying levels of access privileges

- Billing integration - Connecting payment systems for paid APIs

- Usage tracking - Monitoring rate limits and quotas
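Since MCP leaves rate limits and quotas to the server, one option is a per-client token bucket checked before each `tools/call`. The sketch below is a generic token-bucket limiter, not anything MCP defines; the client ids and limits are hypothetical, and the injectable clock exists only so the behavior can be tested deterministically.

```python
import time
from typing import Callable


class TokenBucket:
    """Permit bursts up to `capacity` calls, refilling at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float, clock: Callable[[], float]) -> None:
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity
        self.updated = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Add tokens for the time elapsed since the last check, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


class UsageTracker:
    """One bucket per client, plus a count of the calls each client was allowed."""

    def __init__(self, capacity: float, rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity, self.rate, self.clock = capacity, rate, clock
        self.buckets: dict[str, TokenBucket] = {}
        self.calls: dict[str, int] = {}

    def check(self, client_id: str) -> bool:
        if client_id not in self.buckets:
            self.buckets[client_id] = TokenBucket(self.capacity, self.rate, self.clock)
        allowed = self.buckets[client_id].allow()
        if allowed:
            self.calls[client_id] = self.calls.get(client_id, 0) + 1
        return allowed


if __name__ == "__main__":
    tracker = UsageTracker(capacity=3, rate=0.5)
    print([tracker.check("client-a") for _ in range(5)])
    print(tracker.calls)
```

The `calls` counter doubles as a crude usage record; billing would still need persistent storage, which this leaves out.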

2. Domain-Specific Limitations

The protocol is generalized, not specialized:

- Limited handling of specialized data types - No standardized approach for domain-specific data

- Metadata constraints - No specific provisions for rich metadata structures

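- Binary asset transfer - Binary data can be sent (for example, as base64-encoded image content or blob resources), but the protocol is primarily designed around text-based interactions, and the encoding adds overhead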
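To illustrate the binary case: a resource read can return binary contents as a base64 string in a `blob` field alongside `uri` and `mimeType`, which is how I read the spec's resource contents type. The URI and sample bytes are hypothetical. The sketch also shows the cost: base64 makes the payload about a third larger, which matters for the bandwidth of data-heavy applications.

```python
import base64
from typing import Any


def blob_resource_contents(uri: str, data: bytes, mime_type: str) -> dict[str, Any]:
    """Wrap raw bytes as resource contents with a base64-encoded `blob` field."""
    return {
        "uri": uri,
        "mimeType": mime_type,
        "blob": base64.b64encode(data).decode("ascii"),
    }


def decode_blob(contents: dict[str, Any]) -> bytes:
    """Recover the original bytes on the client side."""
    return base64.b64decode(contents["blob"])


if __name__ == "__main__":
    # Hypothetical 3,000-byte asset: a PNG signature followed by zero padding.
    data = b"\x89PNG\r\n\x1a\n" + bytes(2992)
    contents = blob_resource_contents("file:///assets/logo.png", data, "image/png")
    assert decode_blob(contents) == data
    # Base64 turns every 3 input bytes into 4 characters (about 33% overhead).
    print(len(data), "bytes ->", len(contents["blob"]), "characters")
```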

3. Performance Considerations

For large-scale service integration:

- Bandwidth constraints - Especially relevant for data-heavy applications

- Caching mechanisms - Not fully addressed in the specification

- Search optimization - No protocol-level support for domain-specific search optimizations
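Since the specification leaves caching to implementers, a server can cache idempotent tool results itself. Here is a minimal time-to-live cache keyed on the tool name plus its arguments. It is a generic technique rather than anything MCP defines, the tool name and arguments are hypothetical, and it should only wrap read-only tools whose results can safely be a little stale.

```python
import json
import time
from typing import Any, Callable


class TTLCache:
    """Cache tool results keyed by (tool name, arguments) for `ttl` seconds."""

    def __init__(self, ttl: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl
        self.clock = clock
        self._entries: dict[str, tuple[float, Any]] = {}

    @staticmethod
    def _key(tool: str, arguments: dict[str, Any]) -> str:
        # sort_keys makes {"a": 1, "b": 2} and {"b": 2, "a": 1} share one key.
        return tool + ":" + json.dumps(arguments, sort_keys=True)

    def get_or_call(self, tool: str, arguments: dict[str, Any],
                    fetch: Callable[[], Any]) -> Any:
        """Return a fresh cached value, or call `fetch` and store its result."""
        key = self._key(tool, arguments)
        now = self.clock()
        hit = self._entries.get(key)
        if hit is not None and now - hit[0] < self.ttl:
            return hit[1]
        value = fetch()
        self._entries[key] = (now, value)
        return value


if __name__ == "__main__":
    cache = TTLCache(ttl=30.0)

    def fetch() -> dict[str, int]:
        print("calling the backend API")
        return {"rows": 42}

    cache.get_or_call("search_database", {"tier": "enterprise"}, fetch)
    cache.get_or_call("search_database", {"tier": "enterprise"}, fetch)  # cached
```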

4. Integration Depth

Current MCP implementations typically provide:

- Basic functionality - which may not cover a service’s full capabilities

- Limited parameter options - May not support all advanced parameters

- Simplified metadata - Often lacking the complete richness of direct API access

Real-World Application: External Services + MCP

What might an MCP server enable for various services? Here are some practical use cases:

- AI-Assisted Data Analysis
  - A data scientist working in Jupyter Notebook with an MCP-enabled assistant could say “Pull the latest user engagement metrics and visualize the trends” and get relevant results directly in their environment.

- Content Creation Workflows
  - A content marketer writing in an MCP-enabled IDE could ask “What insights from our customer surveys would support this point?” and have the AI suggest appropriate quotes and statistics.

- Development Workflow Integration
  - “Show me which of our microservices are affected by this API change” - the AI could analyze your codebase and deployment infrastructure to identify impacts.

- Knowledge Management
  - “Find all internal documents related to our product roadmap for Q3” - enabling better organization and retrieval of information across the organization.

Building an MCP Server: Considerations

If you’re considering building an MCP server, you’ll need to address:

- Authentication Flow
  - How will you handle API credentials securely?
  - Will you support different authentication methods for different environments?

- Feature Scope
  - Which API endpoints will you expose?
  - How will you translate natural language queries into API parameters?

- Deployment Strategy
  - Will you run the server locally or deploy it remotely?
  - How will you handle scaling for multiple simultaneous users?

- Security Model
  - How will you prevent unauthorized access?
  - How will you handle rate limiting and usage tracking?
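On the translation question, MCP's own answer is a schema: each tool advertises an `inputSchema` (JSON Schema), the model fills in arguments that match it, and the server validates them before touching the real API. A minimal sketch of that last step follows. The `search_articles` tool, the `api.example.com` endpoint, and the `EXAMPLE_API_KEY` environment variable are all hypothetical; reading the key from the server's environment keeps credentials out of the model's context.

```python
import os
from typing import Any
from urllib.parse import urlencode

# Hypothetical tool exposing one endpoint of a hypothetical REST API.
SEARCH_TOOL = {
    "name": "search_articles",
    "description": "Search the article archive by keyword, newest first.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "query": {"type": "string", "description": "Keywords to search for"},
            "limit": {"type": "integer", "minimum": 1, "maximum": 50},
        },
        "required": ["query"],
    },
}

API_BASE = "https://api.example.com/v1/articles"  # placeholder endpoint


def build_request(arguments: dict[str, Any]) -> tuple[str, dict[str, str]]:
    """Validate model-supplied arguments and turn them into a URL and headers."""
    query = arguments.get("query")
    if not isinstance(query, str) or not query.strip():
        raise ValueError("'query' must be a non-empty string")
    limit = arguments.get("limit", 10)
    if not isinstance(limit, int) or isinstance(limit, bool) or not 1 <= limit <= 50:
        raise ValueError("'limit' must be an integer between 1 and 50")
    # The credential comes from the server's environment, never from the model.
    api_key = os.environ.get("EXAMPLE_API_KEY", "")
    url = f"{API_BASE}?{urlencode({'q': query, 'limit': limit})}"
    return url, {"Authorization": f"Bearer {api_key}"}


if __name__ == "__main__":
    print(SEARCH_TOOL["name"])
    print(build_request({"query": "model context protocol", "limit": 3})[0])
```

Validating on the server, rather than trusting the schema alone, matters because the model's arguments are untrusted input like any other.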

The Future: Could MCP Replace Custom Integrations?

A significant portion of developer time today is spent building custom integrations between systems - whether through APIs, SDKs, or more hacky approaches when official methods don’t exist.

The question arises: Could MCP offer a more elegant alternative to these custom integrations? After careful consideration, I see several important distinctions:

MCP vs. Custom Integrations: Different Problems, Different Solutions

Custom Integration Characteristics:

- Tight Coupling Focus: Custom integrations often create tightly-coupled systems

- Specialized Implementation: Built for specific use cases and requirements

- Fragility: May break when APIs change their structure

- Maintenance Burden: Each integration requires ongoing maintenance

- Technical Debt: Often accumulates as systems evolve

MCP Characteristics:

- Standardized Interface Focus: MCP provides consistent interfaces to systems

- Cooperative Relationship: Requires explicit implementation by service providers

- Stability: Based on a defined protocol that evolves deliberately

- Unified Maintenance: Updates to the MCP server benefit all clients

- Technical Implementation: Uses JSON-RPC 2.0 as its standardized message format

When MCP Could Replace Custom Integrations

MCP isn’t a direct replacement for all custom integrations, but rather a potential evolution of how systems connect. MCP could replace custom integrations in scenarios where:

- Service Providers Implement MCP Willingly: If a service creates an official MCP server, developers would have an easier path to integration.

- AI-Assisted Interactions: For uses like “find me relevant data,” an MCP server could provide better results than rigid API calls.

- Cross-Platform Workflows: When the goal is to enable AI assistants across multiple platforms to access the same functionality.

When Custom Integrations Remain Necessary

Custom integrations will continue to be necessary when:

- High Performance Requirements: For systems requiring extremely low latency or high throughput.

- Complex Business Logic: When integration requires extensive transformation or business rules.

- Legacy System Integration: For connecting to systems without modern API capabilities.

Conclusion: Is MCP Ready for Production?

MCP represents a significant advancement in how AI assistants interact with external systems. While still evolving, it offers compelling benefits for organizations looking to standardize their AI integrations.

For developers, MCP creates exciting possibilities for AI-assisted workflows, data access, and tool integration. However, production implementations should proceed with caution, particularly around security considerations.

As we’ve explored, MCP isn’t a replacement for all integration techniques, but rather a complementary approach that could reduce the complexity of connecting AI systems to external tools. The protocol’s real promise lies in creating standardized, intentional connections between AI systems and the services they need to access.

In Part 2 of this series, we’ll dive into a practical example of building a local MCP server that integrates Claude and ChatGPT with a real-world API, demonstrating how developers can experiment with these capabilities in a controlled environment.
